Nintendo’s Switch 2 launched on June 5th, 2025 with Mario Kart World headlining the platform and, on paper, showcasing its new 4K60 + HDR output pipeline. That promise lands in a market where HDR is the consumer standard: analysts value the global HDR TV segment at ≈ US $150B in 2024 and project US $250B by 2033. Consumer TVs with HDR functionality started shipping in 2015, and since most consumers replace their TV every 6.4 years, a high percentage of console owners in 2025 now game on HDR-capable screens.

Yet, as I’ll show in this article, Mario Kart World surfaces an industry-wide problem: SDR-first authoring with a last-minute tonemap hack ruins the experience. I’d have figured that SDR games masquerading as HDR, or “fake HDR” as some of the more incendiary YouTubers call it, would be a trend left back in 2020. But here we are in 2025, with a new generation of consoles and a headliner game that still reduces its color gamut to SDR and has no more dynamic range than the SDR presentation. In this article I’ll show my evidence for why I think this game is a “fake HDR” title, and what developers can do to avoid this in the future.

I approach this critique with some experience. I led the Dolby Vision for Games program on Xbox Series X|S, helping developers ship Dolby Vision masters on Godfall, Halo Infinite, and COD Warzone, and I’m consulting on more titles still under NDA. Those projects taught me that HDR excellence starts at the very first art review, not in the final weeks of polish.

Test Methodology

Here is the capture chain I used to gather this information. If you think I’m doing something wrong, I’d love to know.

Hardware & capture path

- Launch-model Nintendo Switch 2, over HDMI from the official dock, into a Blackmagic UltraStudio 4K Mini

- Captured in Blackmagic Media Express (3.8.1) as ProRes 4444 on macOS (15.1), running on a Mac Studio M1 Ultra

- Viewed on Asus ProArt PA27UCX mastering monitor (2,000 nits, Rec.2020 PQ, hardware calibrated)

- DaVinci Resolve to analyze captures

How can you view this best at home?

Images posted on this site are in HDR in the AVIF format with the full Rec.2020 PQ image data. They’re lossy, but should give a good representation of the HDR experience as long as you have a display that goes up to 1,000 nits to view the full dynamic range of the scene. You should have HDR enabled in your OS.

I recommend viewing this on a MacBook Pro with its built-in Liquid Retina XDR display, on a high-end PC HDR monitor (similar to the ProArt I mentioned earlier, though there are now many displays of similar or better quality), or on an LG C4/G4 or better TV in the Cinema picture preset.

Google Chrome and other Chromium based browsers seem to have the best viewing experience. Safari will be adding HDR image support later, but as of this writing, it’s not out of beta.

Capture Procedure

- Set the Nintendo Switch 2 console settings to 4K 60, HDR, limited range

- Closed the game if it was already running (some titles on consoles set their HDR tonemap only once at startup; this bypasses any issues when swapping the OS mapping during testing)

- Opened the game from a clean start and ran a single lap of Mario Bros. Circuit at 50cc

- Recorded using Blackmagic Media Express into the ProRes 4444 codec (a 4:4:4 capture medium that carries up to 12 bits per channel; the source content is only 10 bpc)

- Recorded one lap pass per setting using the steps above, each time changing the Switch 2 “Maximum Brightness” HDR calibration: 205 nits → 1,000 nits → 2,000 nits → 10,000 nits (I verified in DaVinci Resolve that the console UI allows the full PQ range).

- Analyzed the captured gameplay in DaVinci Resolve’s waveform monitor
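For readers who want to sanity-check nit readings from their own captures, the conversion from a 10-bit limited-range PQ code value to nits can be sketched as below. This is a minimal sketch, assuming the standard SMPTE ST 2084 (PQ) constants and 10-bit narrow-range levels (64 to 940). The function names are illustrative and are not part of Resolve or any capture tool.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def limited_10bit_to_signal(code: int) -> float:
    """Normalize a 10-bit limited-range code value (64..940) to 0..1."""
    return min(max((code - 64) / 876, 0.0), 1.0)


def pq_to_nits(signal: float) -> float:
    """PQ EOTF: normalized signal (0..1) to absolute luminance in nits."""
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def nits_to_pq(nits: float) -> float:
    """Inverse PQ EOTF: absolute luminance in nits to normalized signal."""
    y = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


# Print the luminance that a few limited-range code values represent.
for code in (64, 512, 723, 940):
    nits = pq_to_nits(limited_10bit_to_signal(code))
    print(f"code {code}: {nits:.1f} nits")
```

Because PQ is an absolute encoding, a peak reading on the waveform maps directly to a nit value regardless of which display the capture is viewed on.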

Findings

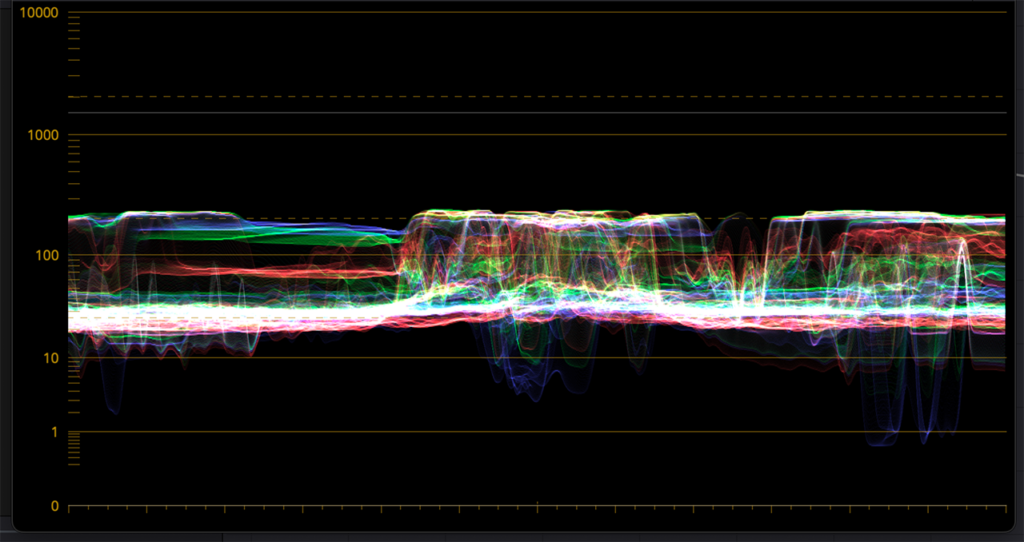

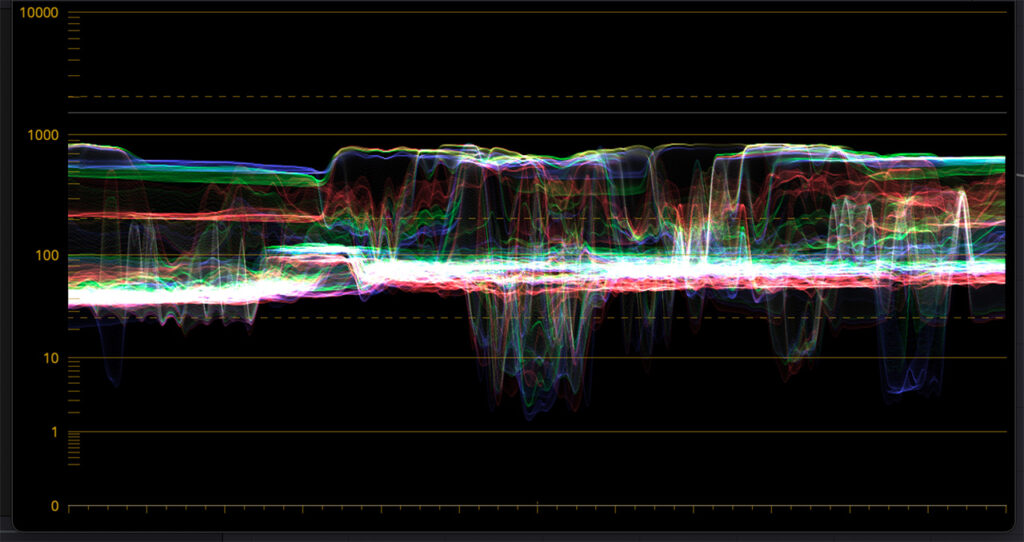

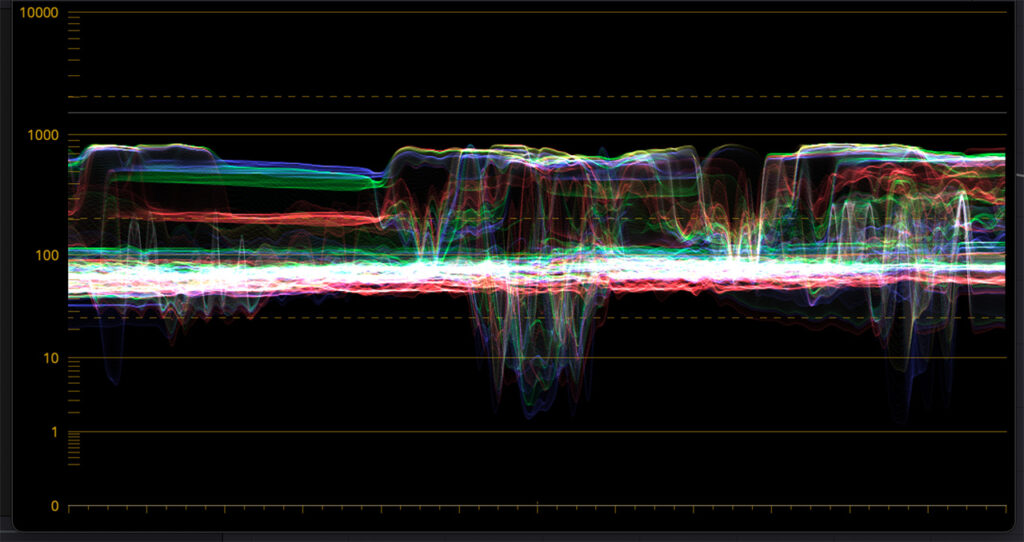

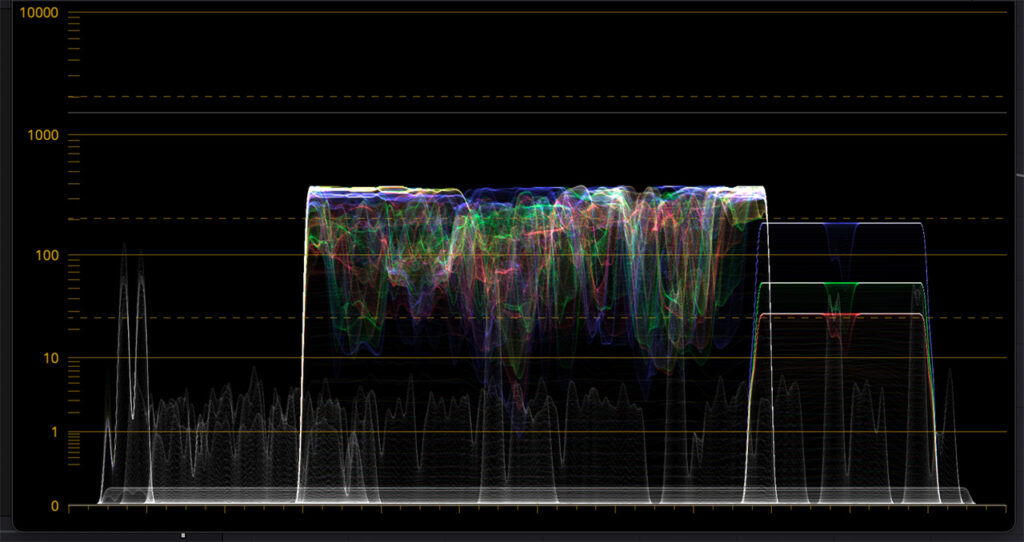

Static tone mapping and a clamped 1,000 nit ceiling

The game’s peak brightness at a console setting of 205 nits is about 205 nits. This is behaving as it should.

The game’s peak brightness at a console setting of 2,000 nits is about 900 nits. This is likely the intended behavior, but it makes the art look washed out, as the clouds lack defined detail.

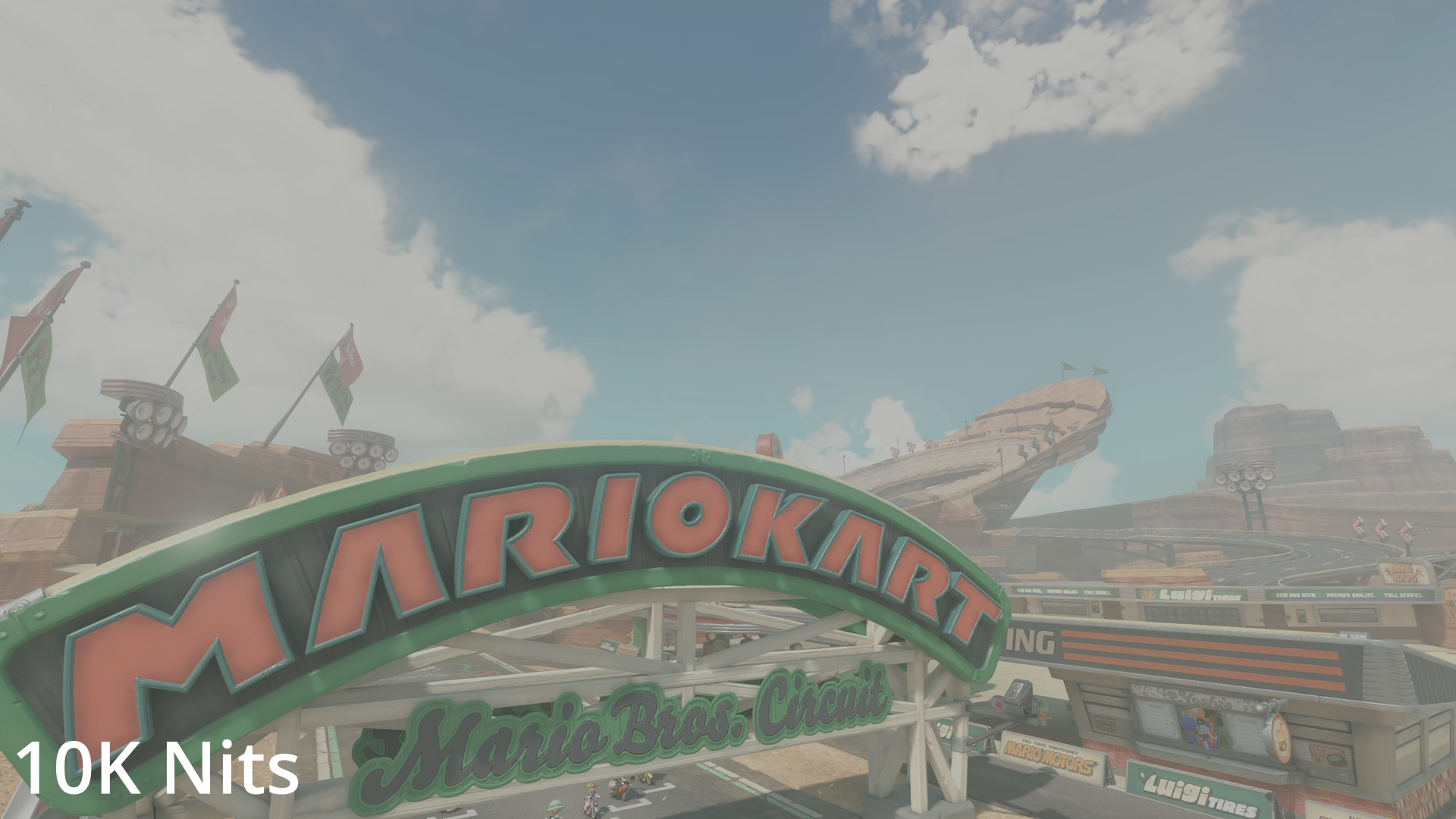

Even when the user cranks the console brightness to 10,000 nits, captured peaks in game never exceed ~950 nits.

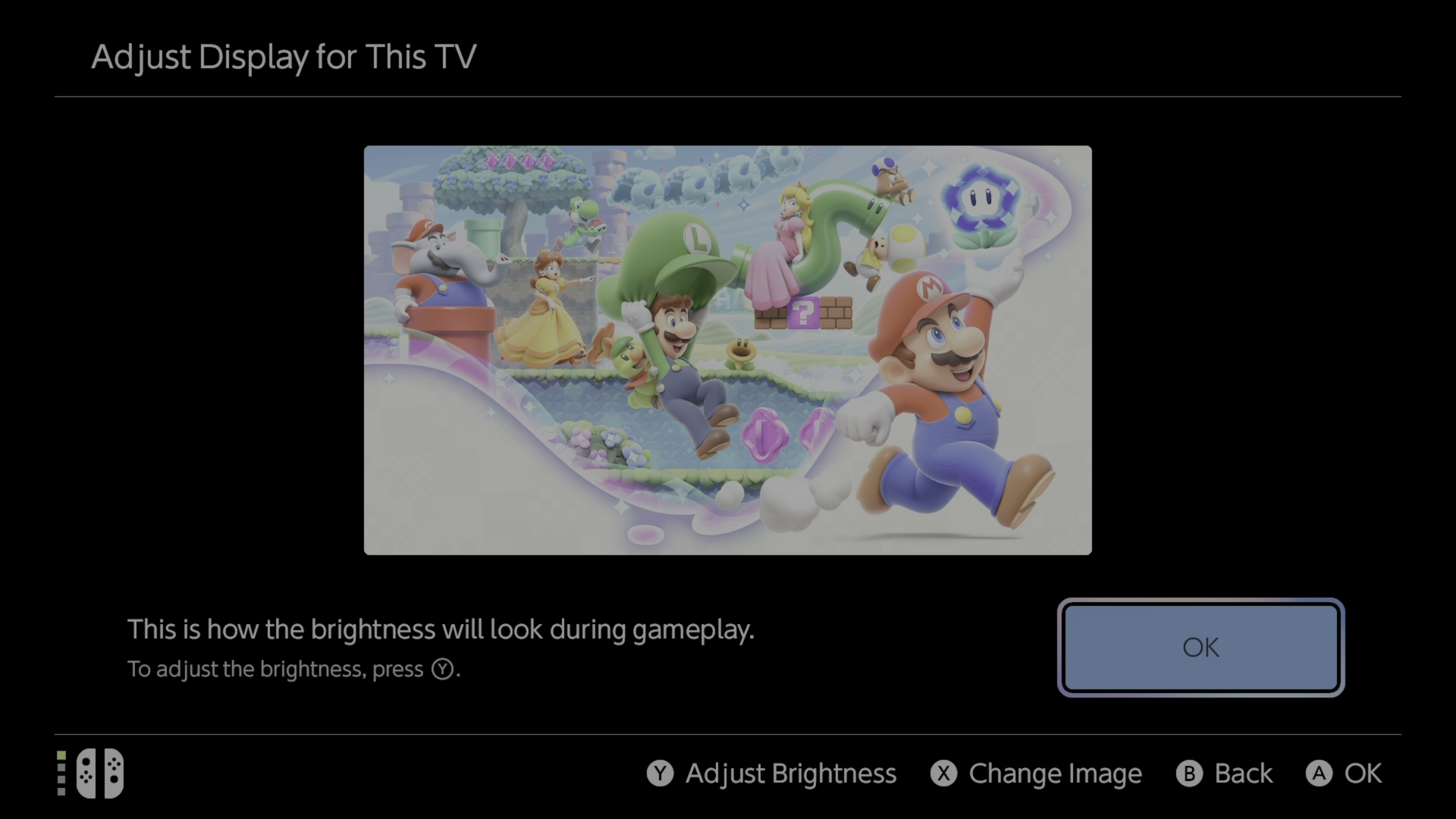

Nintendo’s own test image peaks at only ~500 nits even if you set 10,000 nits peak brightness. Not a good sign that they took HDR seriously.

I can’t peek into the math of how they tonemap from FP16 linear space to the Rec.2100 display space, but from my testing I can reasonably assume they are using a single linear tonemap: fixed-function math that goes from FP16 -> Rec.2100, with a scale factor derived from the console brightness setting.

It’s likely that the game’s creative intent is designed to only hit ~1,000 nits of brightness. This would be OK if they were limited in resources and could only get their hands on 1,000 nit peak brightness reference displays and needed to be conservative in their mastering. But why does the game not achieve 1,000 nits peak brightness when the Console max brightness is set to 2,000 or 10,000? This suggests an oversight in how their HDR tonemapper works, or they utilized scaling that is not dynamic.

Single Slope Tone Mapping

If the game’s true creative intent is to peak at 1,000 nits, then any console HDR brightness setting over 1,000 nits should have no effect on the game’s output. Since the output does change, this suggests to me that the console peak brightness is just one term in the equation they use to scale, and that they aren’t considering their own creative intent for HDR.
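To make that reasoning concrete, here is a small sketch that compares the captured peaks against what a tonemapper honoring a 1,000 nit creative intent would output. The 1,000 nit intent is the hypothesis from this section, not something Nintendo has confirmed, and the measured values are the approximate peaks reported above.

```python
CREATIVE_PEAK_NITS = 1000.0  # hypothesized creative intent, not confirmed


def expected_peak(console_max_nits: float,
                  creative_peak: float = CREATIVE_PEAK_NITS) -> float:
    """Peak output if the game clamps to min(display peak, creative intent)."""
    return min(console_max_nits, creative_peak)


# Approximate peaks read from the captures (console setting -> measured peak).
measured = {205: 205, 2000: 900, 10000: 950}

for setting, peak in measured.items():
    target = expected_peak(setting)
    print(f"console {setting:>5} nits: expected {target:.0f}, "
          f"measured ~{peak}, shortfall {target - peak:.0f}")
```

Under the hypothesis, the 2,000 and 10,000 nit settings should both land on the same peak. The captures instead fall short by different amounts, which is what points to the console setting still being a live term in the scaling.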

It’s also possible that they didn’t have enough time to fine-tune HDR and their creative intent was not achieved. Typically, with dynamically tonemapped games, raising the console max brightness reveals more detail and headroom in bright objects like clouds and skies. In Mario Kart World, no peak brightness setting reveals any more detail.

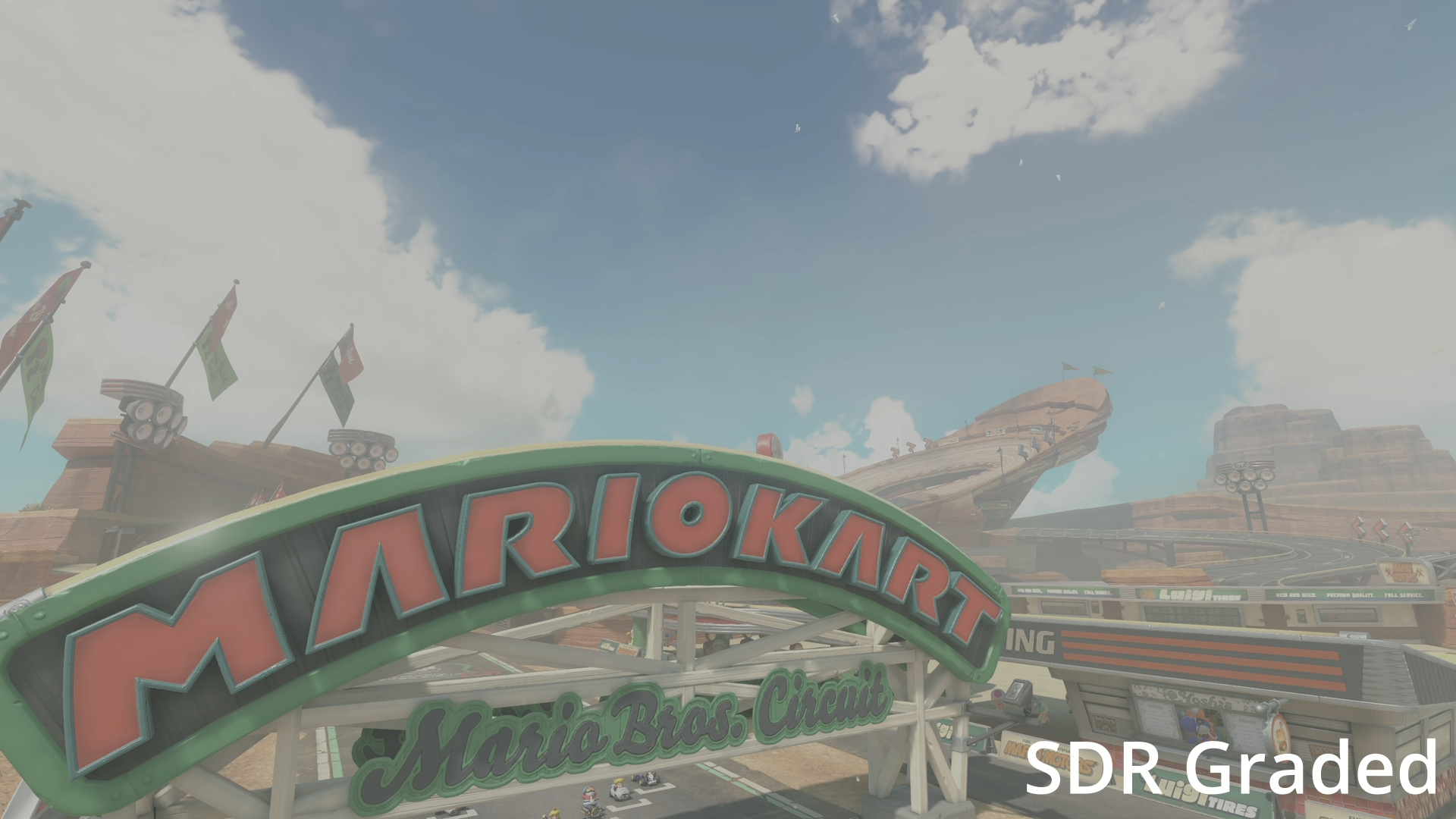

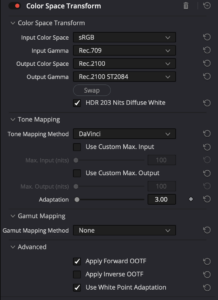

This is likely due to the game being art directed only for SDR. In SDR you typically have to make tradeoffs in how contrasty a scene can feel, between details in the darkest or brightest objects. SDR is limited in dynamic range, so you have to give something up. The easiest way to get to HDR from there is to take your SDR frame buffer and extend it to HDR with your own tone mapping function. Many developers under a time constraint build these in a tool called DaVinci Resolve and export a LUT to save time on this transform. It’s not going to give you a true HDR representation, but it’s something a single rendering engineer can cook up and implement in a week: a brighter, stretched version of the approved and art directed SDR version of the game.

To test my theory, I can use DaVinci Resolve’s Rec.709 to Rec.2020 PQ color space transform and dial in settings that give me a nearly identical look. The clouds seem to be dynamic, so some sections may show shadows that aren’t there, but when I stretch the SDR into a 1,000 nit Rec.2100 image, I get an incredibly similar result.

Getting such a close match with this method confirms to me that there is one SDR master of the game, and that they are extending it with tone mapper functions from the FP16 linear image into the Rec.2020 PQ space. Simply put, in Mario Kart World you’re getting an SDR image stretched into HDR10.
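The kind of SDR stretch described above can be sketched in a few lines. This is a minimal sketch and not Resolve’s color space transform or Nintendo’s code: the 2.4 display gamma, the `highlight_exp` expansion exponent, and the function names are all assumptions for illustration. The matrix is the standard BT.709 to BT.2020 primaries conversion, and the encode is SMPTE ST 2084 (PQ).

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32

# Linear-light BT.709 -> BT.2020 primaries conversion (ITU-R BT.2087).
BT709_TO_BT2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def nits_to_pq(nits: float) -> float:
    """Inverse PQ EOTF: absolute luminance in nits to a 0..1 signal."""
    y = (max(nits, 0.0) / 10000.0) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def stretch_sdr_pixel(rgb709, peak_nits=1000.0, sdr_gamma=2.4,
                      highlight_exp=1.25):
    """Stretch one gamma-encoded SDR pixel (0..1) into Rec.2020 PQ.

    sdr_gamma and highlight_exp are illustrative assumptions.
    """
    # 1. Decode the SDR signal to linear light.
    linear = [min(max(c, 0.0), 1.0) ** sdr_gamma for c in rgb709]
    # 2. Push midtones down so only highlights approach the new peak.
    expanded = [c ** highlight_exp for c in linear]
    # 3. Re-express the same color in Rec.2020 primaries (no gamut gain).
    rgb2020 = [sum(m * c for m, c in zip(row, expanded))
               for row in BT709_TO_BT2020]
    # 4. Scale SDR white to the target peak and PQ-encode.
    return tuple(nits_to_pq(c * peak_nits) for c in rgb2020)


print(stretch_sdr_pixel((1.0, 1.0, 1.0)))  # SDR white lands at the peak
print(stretch_sdr_pixel((1.0, 0.0, 0.0)))  # Rec.709 red inside Rec.2020
```

Note that step 3 only relabels the colors: a fully saturated Rec.709 red still sits well inside the Rec.2020 container, so the output is brighter but never more saturated than the SDR master.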

Wait, Why Do I See Banding In The Sky?

This is fully visible at 1:1 in the ProRes 4444 capture. I’ve zoomed in to showcase the banding on a smaller image and so that SDR users can see this as well.

Banding over the sky dome remains whether viewed in SDR or HDR10. While this is minor, these issues are more visible in HDR, where the image can appear brighter. This can happen due to lower bit-depth precision when blending this texture, or from utilizing R9G9B9E5 as a back buffer to reduce memory bandwidth, which requires extra care, like dithering, to cover up these transitions. While I can’t do external testing to determine the exact cause, it’s something an art team would likely catch and fix if they had done art reviews in HDR10 rather than sticking to SDR, where problems like this can be very hard to see, especially on smaller displays.
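To illustrate why a smooth sky bands and why dithering helps, here is a small sketch that quantizes a subtle gradient with and without noise. The 8-bit depth, the 2% gradient, and the ±0.5 LSB uniform noise are assumptions chosen for illustration; this says nothing about the formats Mario Kart World actually uses.

```python
import random


def quantize(value: float, bits: int) -> float:
    """Round a 0..1 value to the nearest representable level."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


def quantize_dithered(value: float, bits: int, rng: random.Random) -> float:
    """Add +/-0.5 LSB of uniform noise before rounding to break up bands."""
    levels = (1 << bits) - 1
    noise = rng.random() - 0.5
    q = round(value * levels + noise)
    return min(max(q, 0), levels) / levels


def longest_flat_run(samples) -> int:
    """Length of the longest run of identical neighbors (a visible band)."""
    longest = run = 1
    for prev, cur in zip(samples, samples[1:]):
        run = run + 1 if cur == prev else 1
        longest = max(longest, run)
    return longest


width = 3840
# A subtle sky gradient covering only 2% of the signal range across 4K.
gradient = [0.50 + 0.02 * x / (width - 1) for x in range(width)]

rng = random.Random(0)
plain = [quantize(v, 8) for v in gradient]
dithered = [quantize_dithered(v, 8, rng) for v in gradient]

print("widest band without dither:", longest_flat_run(plain), "px")
print("widest band with dither:   ", longest_flat_run(dithered), "px")
```

Without dithering, the gradient collapses into a handful of bands hundreds of pixels wide. With dithering, the hard edges are traded for fine noise that the eye averages out.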

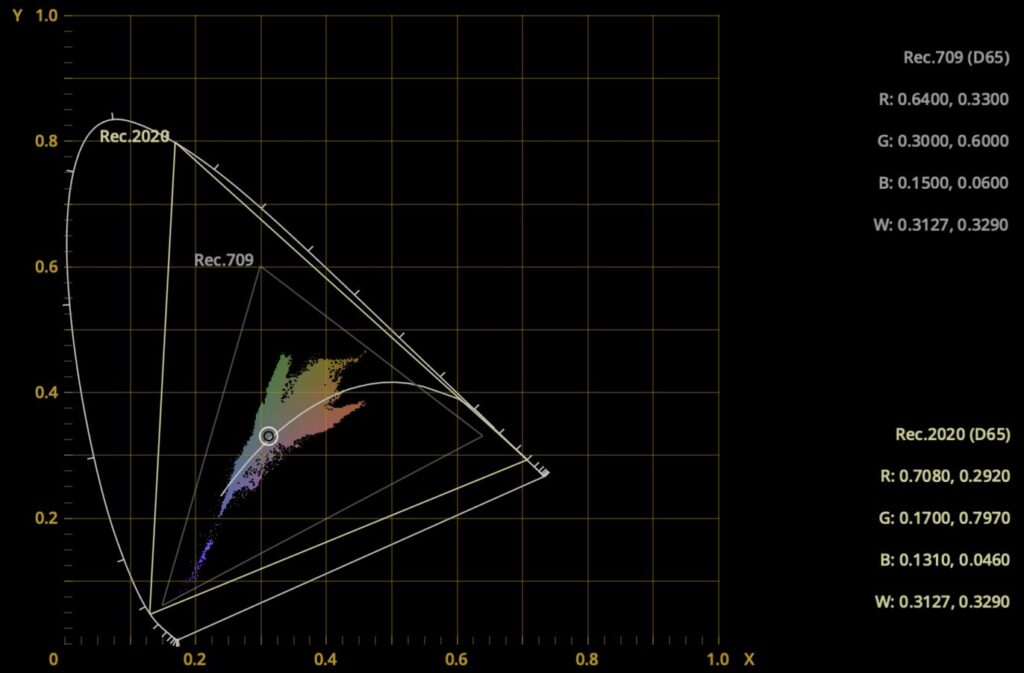

Stuck in SDR Color space

The game’s art style is very colorful and bright; however, the game makes no use of the extended color gamut afforded by Rec.2020. Instead, all colors are clamped to Rec.709, the same color space you get in SDR. This is easily seen by analyzing the color gamut of the HDR capture: no pixel values fall outside the Rec.709 triangle into the extended gamut.
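The gamut check can be sketched as follows: convert each linear-light Rec.2020 pixel to Rec.709 primaries and look for negative components, which only appear for colors outside the Rec.709 triangle. This is a minimal sketch that assumes the pixels have already been PQ-decoded to linear light. The function names and the tolerance are illustrative, and the matrix is the standard BT.2020 to BT.709 primaries conversion.

```python
# Linear-light BT.2020 -> BT.709 primaries conversion (ITU-R BT.2407).
BT2020_TO_BT709 = (
    (1.6605, -0.5876, -0.0728),
    (-0.1246, 1.1329, -0.0083),
    (-0.0182, -0.1006, 1.1187),
)


def exceeds_rec709(rgb2020_linear, tolerance=1e-3) -> bool:
    """True if a linear Rec.2020 pixel lies outside the Rec.709 gamut.

    A color outside the Rec.709 triangle needs a negative amount of at
    least one Rec.709 primary. Components above 1.0 only mean "brighter",
    so they are not counted.
    """
    for row in BT2020_TO_BT709:
        component = sum(m * c for m, c in zip(row, rgb2020_linear))
        if component < -tolerance:
            return True
    return False


def wide_gamut_fraction(pixels) -> float:
    """Fraction of pixels that use colors beyond Rec.709."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(exceeds_rec709(p) for p in pixels) / len(pixels)


frame = [
    (0.5, 0.5, 0.5),           # neutral gray
    (0.6274, 0.0691, 0.0164),  # pure Rec.709 red expressed in Rec.2020
    (0.0, 1.0, 0.0),           # pure Rec.2020 green
]
print(wide_gamut_fraction(frame))
```

A capture that is truly clamped to Rec.709 would report a fraction at or very near zero, apart from small excursions caused by lossy compression, which is what the tolerance is for.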

You might look at the HDR representations of this game and think “Wait, the game appears more colorful”, and this is because of the Hunt Effect. The Hunt Effect describes how we perceive a brighter color as more saturated even though the color itself hasn’t changed; it’s just an optical illusion. This means Nintendo has left another key piece of HDR on the table, likely due to their focus on creating the game for SDR first. Their artists are missing out on bright saturated colors for things like UI elements, sparks that fly off the tires, and explosions, all of which could be directed to pop more if they chose to.

By Tom Axford 1 – Own work, building on this spectrum, CC BY-SA 4.0, https://commons.wikimedia.org/w/index.php?curid=124441017

Subjective Competitive Analysis

Looking at other gameplay content that I’ve mastered in HDR before, wide color gamut helps enhance the red sands in this Unreal demo called Rural Australia. I used it several times to showcase how large a difference extended color gamut makes in the kinds of visuals you can achieve, even in a desert, where you might think extended color gamut isn’t really necessary.

Putting these two gameplay clips side by side showcases what you’re missing when you don’t utilize wide color gamut, and high peak brightness. You can more easily call out specific details, or emphasize colorful elements. It’s more stimulating, and in general your consumers will think your game looks better. If this developer could do this level of work in 2 months, imagine what your game would look like if you built for HDR in the first place.

Why Developers Do This: The SDR First Pipeline Trap

Most teams still:

- Author lighting in sRGB / Rec.709, often not viewing HDR until it’s too late.

- Hold daily art reviews in SDR, locking in their 0-1 range in Rec.709, so that moving to a wider color gamut requires lots of rework.

- Spend lots of art time during production mapping the final FP16 image into SDR.

- Then throw it over the fence to rendering engineers and technical artists to make the SDR image look good in HDR. I hope 2-4 weeks is enough to polish the whole HDR game!

I get it. Developers have been living in SDR for the last 30+ years. Moving to HDR requires different practices and some re-learning. But when gamers in ESA surveys report that the quality of the graphics is the #2 factor in deciding whether to purchase a game, you had better maximize your HDR rendering, because done correctly it increases the visual quality of your game without having to re-author assets.

In my experience, once artists view their game in HDR, major art issues surface. It’s not that HDR creates these issues, but it reveals things that would normally be hidden by lower dynamic range and lower color volume, like the banding issue above. Technical artists then have to compromise, usually in a rush, by making a single static tonemapping curve for HDR and locking the color gamut to Rec.709, intentionally bringing the game’s look closer to SDR.

On paper this ticks the HDR box with minimal risk, but why even bother spending the time on HDR if you’re going to make it look like a stretched version of SDR? TVs already do this by extending maximum brightness, and they do it in a way the user typically prefers. TV manufacturers have gotten really good at making SDR images stretched into pseudo HDR as they’ve been doing this for decades now.

PC gamers have been complaining about these HDR issues for years now. Check any Reddit forum or YouTuber complaining about Fake HDR. Even Digital Trends called static HDR “sad, misleading, and embarrassing”. We can do better…

Why It Matters in 2025

- Consumer hardware is good, really good. – Even mid-range TVs now exceed 1,000 nits and ship with per-frame dynamic tone mapping, Dolby Vision, and HDR10+ capabilities.

- Competitive titles are raising the bar – Games like Call of Duty Warzone and Godfall: Ultimate Edition exceed 1,000 nits peak brightness and use wider color gamuts. I know; I helped them achieve these goals.

- HDR is mainstream – From just a quick browsing of BestBuy, nearly all TVs over 42” are 4K and support HDR. 9th gen consoles are shipping with HDR on by default. The majority of your audience is HDR-equipped.

- Visuals suffer – Working SDR first limits your maximum brightness, limits your color gamut, and limits the impact of your wow moments, like a sunset or a truly scary, dimly lit dungeon.

What Can Developers Do?

Developers, specifically art directors and rendering programmers, need to take HDR seriously from the beginning.

- Commit to upgrading your pipelines to Wide Color Gamut at the start

  - Not all your textures need to be WCG, but you can have a checkbox for textures that exceed Rec.709 so they can be processed by your crunching tools.

  - Too deep into production to make major pipeline changes? Many developers allow for WCG in their VFX only, by using numbers over 1.0 while assuming all their textures are sRGB/Rec.709. This works very well as a first step: it doesn’t require ripping up your texture pipeline, but still delivers WCG in areas where it has the highest impact, like VFX.

- Allow your game to have dynamic range

  - As a rule of thumb, I like to set my high-noon daylight scenes to be at least 5x brighter than nighttime. Try it out on a reference monitor, bring in all your environment artists, and ask which one looks better.

  - Typically you can leave your SDR nighttime scenes untouched. These will hit about 50-100 nits of brightness.

  - That means cranking up the sun intensity of your outdoor scenes by about 5x gets you into a ballpark that most embedded tone mappers in TVs can play along with.

  - VFX needs to be adjusted. Set standards for how bright your VFX artists should make emissives like fire, sparks, gunshots, pickups, etc. Don’t let obvious things like the blast from a gun be brighter than the sun.

- Build a dynamic tone mapping service, or utilize ones by Dolby and HDR10+

  - Because not every display will be capable of hitting 2,000+ nits, you should add your own dynamic tone mapping for the best results.

  - Remove “Auto Iris” or “Automatic Exposure Adjustments” from your game. Let the dynamic tone mapper adjust these based on the user’s display.

  - This will prevent pulsing on higher-end displays, while still ensuring that folks on HDR displays that may only peak at 200-300 nits get a good experience.

  - Dolby handles tone mapping down to SDR and has trim controls to adjust it. I know I’m biased towards that solution because I worked on it. Ask developers who have used it how it helped them if you want more data points.

- Make HDR review the standard

  - Supply calibrated HDR monitors to art/lighting. Get more in your studio if budget allows for it.

  - Do your art reviews on calibrated HDR displays.

  - HDR offers fantastic dynamic range, color, and creative freedom for your images to look like whatever you want when done well. If SDR looks disappointing compared to HDR, then you’re doing it right. (Seriously, do not let your art director reduce the quality of your HDR image in some attempt to make SDR look better. Point them to this article or get on a call with them.)
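As a rough illustration of the dynamic tone mapping advice above, here is a sketch of a display-aware curve that leaves most of the image untouched and only rolls off highlights that exceed what the display can show. It is a deliberately simple shoulder, not Dolby Vision, HDR10+, or the BT.2390 EETF, and the `content_peak` and `knee_fraction` defaults are assumptions for illustration.

```python
def tone_map_nits(scene_nits: float, display_peak: float,
                  content_peak: float = 4000.0,
                  knee_fraction: float = 0.75) -> float:
    """Map a mastered luminance (nits) to what a given display can show.

    Below the knee the image passes through untouched; above it,
    highlights roll off smoothly and approach display_peak without
    ever exceeding it.
    """
    if display_peak >= content_peak:
        # The display can show everything the content was mastered for.
        return min(scene_nits, display_peak)
    knee = knee_fraction * display_peak
    if scene_nits <= knee:
        return scene_nits
    headroom = display_peak - knee
    over = scene_nits - knee
    # Rational shoulder: slope 1 at the knee, asymptote at display_peak.
    return knee + headroom * over / (over + headroom)


# The same mastered values, shown on three different displays.
for display_peak in (300.0, 1000.0, 4000.0):
    row = [round(tone_map_nits(n, display_peak)) for n in (100, 800, 4000)]
    print(f"{display_peak:>6.0f} nit display: {row}")
```

The point of the design is that the curve is computed from the display’s reported peak rather than baked into the art: a 300 nit panel compresses the highlights hard, while a 4,000 nit panel gets the mastered values untouched.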

If you’re Unreal based, I shipped plugins that add Dolby Vision to Unreal Engine projects, even ones designed just for SDR. They solve many of these problems if you’re a smaller team without rendering engineering support. However, you’ll need to contact Dolby to get access to these plugins at the moment.

What Mario Kart World Could Have Looked like…

While the tone mapping for the game is baked in pretty tough, I did some color grading in DaVinci Resolve, eliminating the 1,000 nit ceiling and letting it go to 2,000 nits. Clouds do look much better and more realistic; however, detail is still lacking in those areas. I can’t magically paint on new detail in an afternoon.

I do like the roads and the characters being around 90-110 nits on a high-noon sunny day. This feels right to me, but the VFX isn’t bright enough. Because of this, effects don’t pop and give you the feedback that you’re hitting a blue spark on your drift. Some of the UI elements should stick out too, like a 4,000 nit VFX pop when you pick something up. It’s all of these elements that make your game look more impressive even though you’re utilizing the same assets. Experiment with higher brightness and wider color gamut; you can achieve stunning results you just couldn’t achieve in SDR.

You’re already making your game in HDR and utilizing FP16 linear. Don’t throw all that dynamic range away at the end just because you chose to be SDR first.

Conclusion

Mario Kart World reveals that even the highest caliber of developers aren’t taking HDR seriously. The root cause is SDR-first workflows that tack on a static tone mapper. Players lose, artist intent is capped, and a flagship hardware feature is squandered.

If you’re shipping this year and want to avoid a similar post-mortem, let’s talk. I consult studios of any size on HDR first rendering pipelines, Dolby Vision integration, and dynamic tone-mapping strategies. Reach out: alexander.mejia@human-interact.com.

The era of checkbox HDR is over—let’s build images that finally exploit the full HDR canvas.

Fantastic article! Just recently purchased an HDR capable QD-OLED display and it’s been eye opening to see just how impressive a game with effective HDR implementation can look and conversely how underwhelming a game that does it poorly looks in contrast. For a technology so readily available there is a ton that seems to be misunderstood still.

Yes, it’s pretty amazing when you see a great piece of content in HDR. Going back to SDR or even SDR that’s been inverse tone mapped into HDR feels like looking at something from last decade.

Thanks for this analysis. Having played several hours now on an LG CX with HGIG enabled, I agree with your overall conclusions. The HDR picture feels too bright overall and doesn’t have much in the way of true dynamic range.

What hardware did you use to capture the HDMI output?

I used a Blackmagic UltraStudio 4K Mini. For some reason the console will not output 4:2:2 to my Shogun Inferno, so I had to just capture ProRes 4444 straight into my Mac Studio.

Great article, thanks !

So, do you recommend turning off HDR for Mario Kart World?

Is it better in SDR?

Thank you for the article! Would you suggest going to SDR in the meantime given the current HDR implementation on Switch2? While MarioKart definitely shows it, the Zelda titles that were given switch 2 editions were even worse in this regard.

It all depends on your preference at this point. It’s not going to present a better image than SDR is capable of, so it’s worth trying both to see which one fits your preference. Some HDR TVs display SDR content like this better, and vice versa.

Can you confirm if there’s any game on the Switch 2 that’s utilizing a wide color gamut? To my eye, Breath of the Wild and Tears of the Kingdom are NOT. But that’s just a guess from using my LG G4.

I would need to do more testing. I’ve only had the time to test one game, but most devs are still in rec.709 at the moment.

It looks to me like Donkey Kong Bananza is using wide color gamut. The colors are super saturated, and if I take a screenshot, the screenshot very much loses that saturation (screenshots are SDR/sRGB only, and it’s easy to tell).

I think not many people agree on “why it matters in 2025”.

HDR10 is a mess. Where’s DisplayHDR 1000 certified monitors? Sadly, even 1000 nits itself is like a pipe dream.

Look around, so many bad implementations.

But I don’t understand why Nintendo did this very naive HDR. Are they trying to test whether HDR is even worth it or not?

DisplayHDR 1000 certification sets minimum specifications for the capabilities of the display, and on paper should deliver a good HDR experience, assuming content is mastered correctly and the tone mapping is worked out. Some displays do their own tone mapping, and most games do some level of source-based tone mapping (even if static, as shown in Mario Kart World). Either way, there are still ways things can get messed up. One example is a double tone map, where the game tone maps to a specific metric and then the display applies its own secondary tone map on top.

When I worked on Dolby Vision, we solved this problem. A Dolby Vision game can tell the monitor to go into a Dolby Vision game mode, which does not do that secondary tone map. It also communicates the capabilities of that display to the game, so the user doesn’t have to touch a calibration slider (and most don’t, in my experience). This is truly a zero-config HDR experience for users.

Great article Alexander! Very thorough, well explained and good call to action. I’ve been experiencing similar problems with projects over the past few years.

Would you be able to expand on “Remove “Auto Iris” or “Automatic Exposure Adjustments” from your game. Let the dynamic tone mapper adjust these based off the user’s display.”. I don’t quite follow here? If there is no dynamic metadata for certain HDR10 monitors how would this work?

Thanks

Think about Auto Iris and Automatic Exposure Adjustments as a form of source-based tone mapping. You’re still taking very bright values and either clipping, limiting, or bringing those values down. However, applying the same source-based tone mapping doesn’t make sense for both a user with a 4,000 nit peak brightness display and a user with a 300 nit peak brightness display. There are smarter ways than giving everybody the same tone map to improve image quality up and down the range of displays that exist in the wild, and those that will exist in the future.

Hi, what did you adjust the secondary brightness slider (one with Mario Wonder) to in the HDR settings? For my TV, its MaxTML caps around 2000 nits, and I found setting the secondary brightness slider to 5 notches above the lowest setting results in a pleasing look. By default, it’s set too high imo.

Secondly, is your conclusion that the HDR implementation is bad because it leaves headroom (WCG, Luminance > 950 nits) on the table? What are your thoughts on the current implementation given the color volume it’s restricted to?

I kept the secondary slider at default. I understand it variably changes as you set the max brightness. Either way I’m trying to take reference snapshots of how the console/game outputs, not how it should be tone mapped to any specific TV which is another set of variables that open up. In my experience few gamers ever adjust more than one slider, therefore the default is likely the image they determined should go to a reference monitor. Unless someone from Nintendo can tell me what their creative intent is, I have to operate off assumptions like these.

The reason why I state that No WCG and Luminance at 950 nits is sub-optimal is that the game’s art style could benefit and be enhanced. 1) This is a colorful racer, any sort of UI elements, or even bright paints on certain Karts could benefit going into WCG. 2) There is white clipping and lack of details in specular highlights like clouds in the sky which lack detail. This is fine when you’re in SDR and you’re trying to create a punchy image, but this limitation doesn’t exist in HDR, so there’s no need to cap it for users who have better displays.

I appreciate the technical detail and effort put into this post. The author brings a lot of real-world experience to the table, which grounds the measurements they’ve done. Having concrete takeaways for us in the industry is a huge benefit. I hope the ideas shared here make their way into preproduction meetings happening today. I see a lot of “ugh” like this author describes.

But to play the devil’s advocate for a moment, I’ve played games with good HDR implementations (including ones the author has worked on) on nice displays, but I don’t know if it’s necessarily been more visually moving than a well-executed SDR game. Citizen Kane’s cinematography is still a treat even in black and white.

This makes me think that when the aforementioned survey respondents say they value good graphics, they’re speaking to something more nebulous than a rendering pipeline that’s handling a Wide Colour Gamut effectively. I’d like to posit that if developers want to make good HDR implementations a necessity, they’ll need to deliver something your layman will appreciate. Nintendo seems to be suggesting that the Hunt Effect is working well enough for them these days.

This reminds me of drifting in QQ Speed

Great article!

What’s the reasoning behind capturing in limited range? Wouldn’t that impact the overall contrast?

It’s easier to go into ProRes without dealing with signal levels, and yes there are some code values lost, but I’ve not experienced any meaningful differences in my past. I could go back and get a full range capture, but it’s likely going to open up the signal another 20 or 30 code values because the dynamic range is already very compressed.

I’m curious what settings you recommend on the paper white page. I’ve noticed some games have an even worse HDR implementation, leaving a really dim washed out picture (Super Mario Odyssey). Whereas Mario Kart World though not perfect, looks leaps brighter and dynamic. I feel with Odyssey I have to increase paper white way past 200 nits to have a decent picture, dropping it back down in better implemented games like MKW. I’d love to see an analysis on other games like Odyssey or BOTW/TOTK from you.

It’s going to depend on your TV. Some TVs with really low nit output actually have better SDR output than HDR for most games. As I mention in the article, TVs have been working out how to stretch SDR signals for decades now. Either way, this game could look its best in either HDR or SDR; you just have to run tests and determine which you like better, if there’s any difference you can discern at all.